Google Removes Robots.txt Guidance For Blocking Auto-Translated Pages

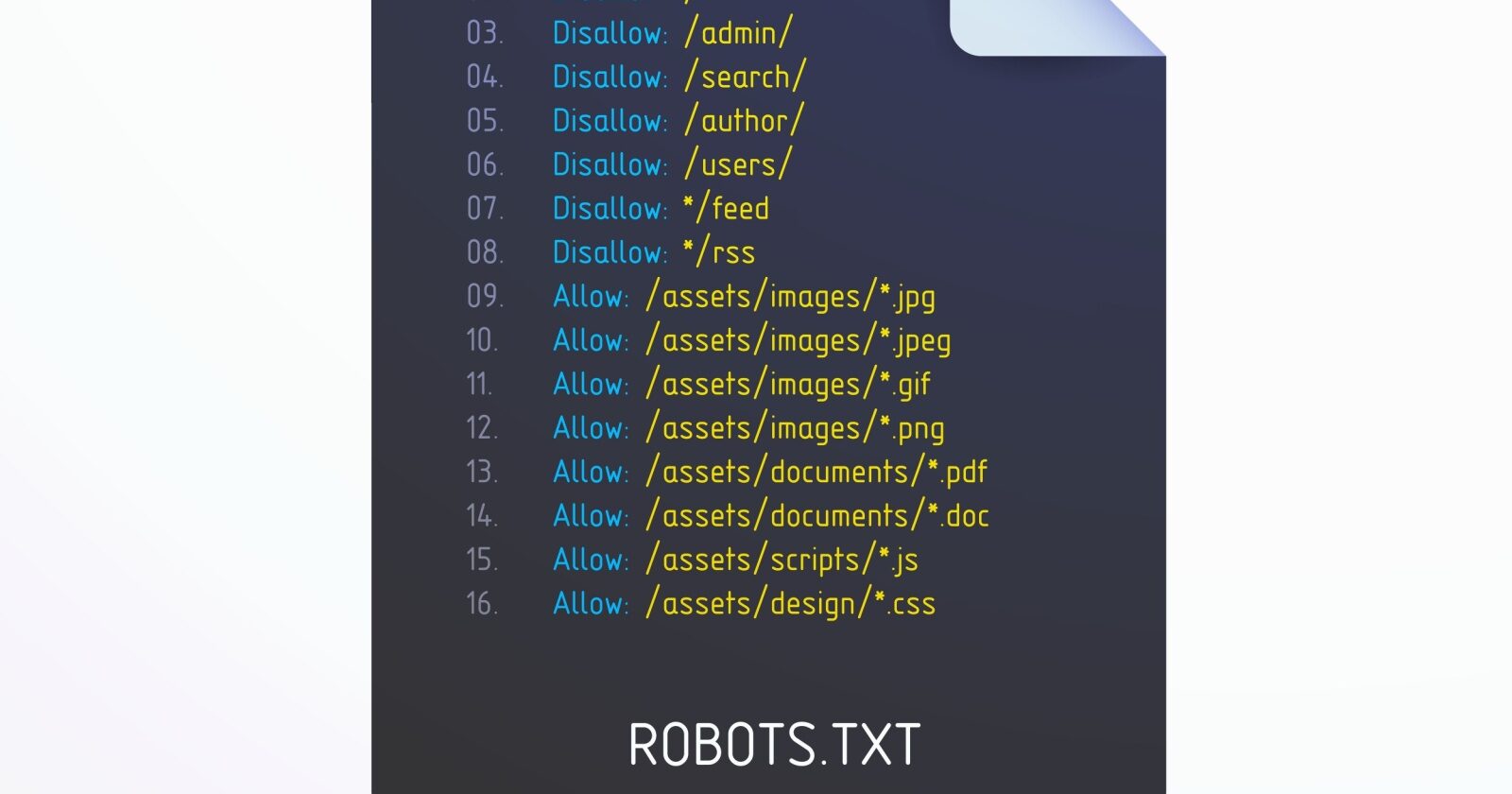

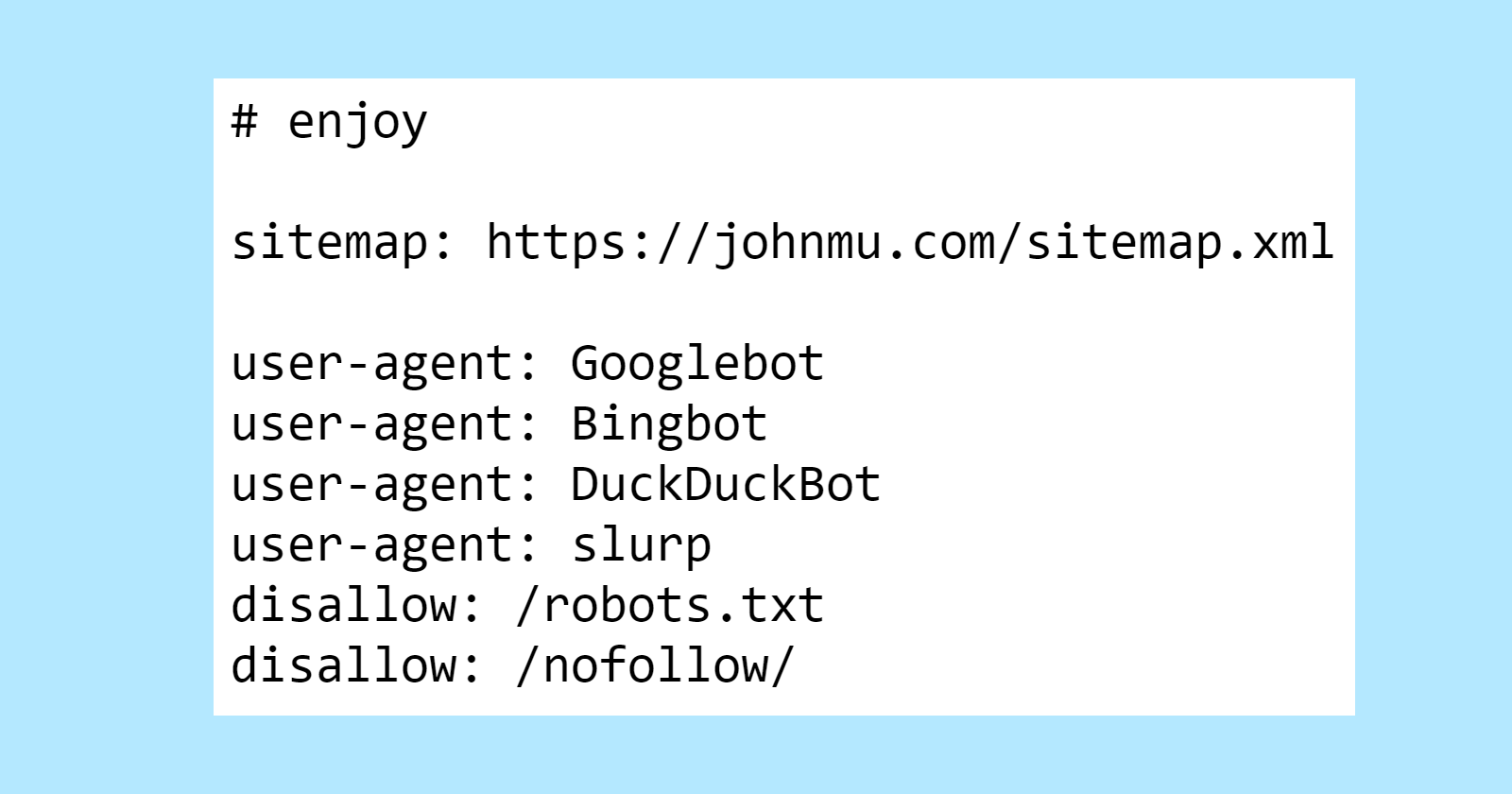

Google has removed guidance from its documentation that advised websites to block auto-translated pages via robots.txt. The change aligns Google’s technical documents with its spam policies, which judge content by its value to users rather than by how it was created. For low-quality translations, Google’s advice is to use page-level meta tags such as “noindex” instead of sitewide exclusions.
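Here is a minimal sketch of the page-level approach. It assumes a site that already records which pages are machine-translated and which of those it judges low quality. The `Page` fields and the `robots_meta_for` helper are hypothetical names for illustration, not part of any Google API. The Python below emits a `noindex` robots meta tag only for the weak translations and leaves everything else indexable:

```python
from dataclasses import dataclass

# Hypothetical page model: field names are illustrative assumptions,
# not part of any Google API.
@dataclass
class Page:
    url: str
    auto_translated: bool
    low_quality: bool  # the site owner's own quality judgment


NOINDEX_TAG = '<meta name="robots" content="noindex">'


def robots_meta_for(page: Page) -> str:
    """Return a per-page robots meta tag, or "" to leave the page indexable.

    Only auto-translated pages judged low quality get noindex. Good
    translations stay indexable, so the decision is made page by page
    instead of through a sitewide robots.txt rule.
    """
    if page.auto_translated and page.low_quality:
        return NOINDEX_TAG
    return ""


if __name__ == "__main__":
    pages = [
        Page("/fr/guide", auto_translated=True, low_quality=False),
        Page("/de/guide", auto_translated=True, low_quality=True),
    ]
    for p in pages:
        # Print each URL with the tag its <head> would carry, if any.
        print(p.url, robots_meta_for(p) or "(indexable)")
```

A `noindex` rule only takes effect if crawlers can fetch the page. Pages handled this way should therefore not also be disallowed in robots.txt.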